Computer Vision (CV), Explainable AI, A/B Testing

Project Overview

The proposed computer vision application was an AI-powered system designed to monitor customer emotions in a restaurant setting, classifying expressions of laughter and distress (crying) through image recognition. The goal of the system was to improve customer service responsiveness, enhance the dining experience, and enable real-time monitoring of customer satisfaction. By analyzing emotions, the system could confirm when a customer was visibly happy or distressed, automatically alerting restaurant staff to check on a guest if signs of distress were detected. This proactive approach aimed to ensure issues were swiftly addressed, offering an added layer of attentiveness. The overall objective was to make customer service more efficient and responsive, supporting restaurants in delivering a unique, attentive dining experience. Market research highlighted a growing interest in customer experience technology, especially as restaurants sought to stand out in a competitive market through superior service.

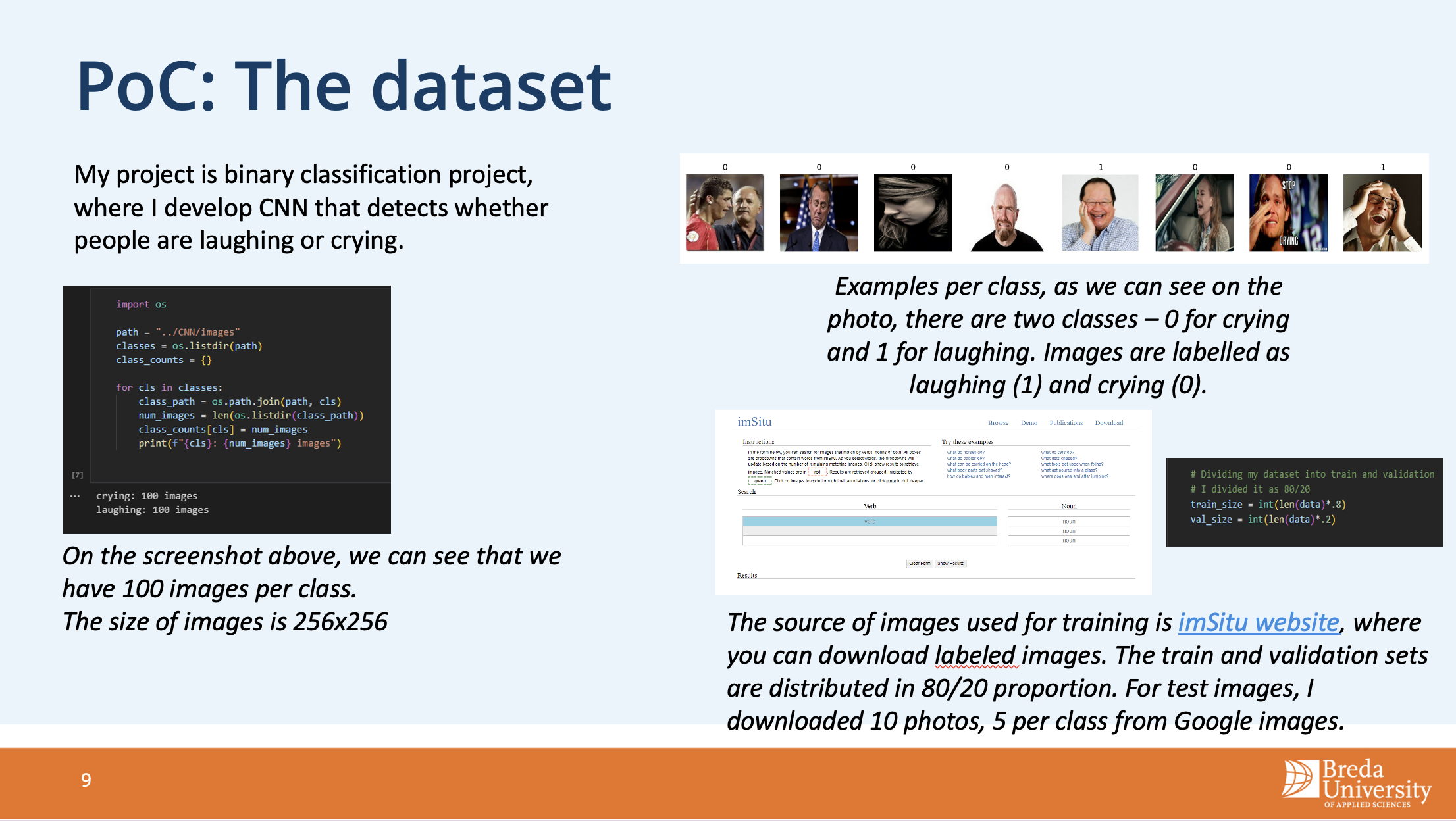

Dataset Selection and Data Augmentation

For this project, the imSitu dataset, developed by Mark Yatskar, Luke Zettlemoyer, and Ali Farhadi, was selected because it supports situation recognition tasks in image classification. A data augmentation pipeline was defined using Keras to enhance the dataset. This pipeline applied random transformations, such as horizontal flipping, rotation, zooming, contrast shifts, and brightness adjustments, to create diverse versions of the original images, with the aim of improving the model’s ability to generalize to new data. The augmentation pipeline was then applied to both the training and validation datasets through the dataset .map() method, so that each batch of images received these transformations.
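The augmentation step described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the transformation strengths (0.1 throughout), the helper name augment_dataset, and the assumption that pixel values are already scaled to the range 0 to 1 are all hypothetical. The sketch follows the text in being applicable to any batched dataset; a common alternative is to augment only the training set so that validation metrics reflect unaltered images.

```python
import tensorflow as tf

# Illustrative augmentation layers; every strength value here is an assumption.
augmentation_layers = [
    tf.keras.layers.RandomFlip("horizontal"),
    tf.keras.layers.RandomRotation(0.1),   # up to +/-10% of a full turn
    tf.keras.layers.RandomZoom(0.1),
    tf.keras.layers.RandomContrast(0.1),
    # value_range assumes pixels were already normalized to [0, 1]
    tf.keras.layers.RandomBrightness(0.1, value_range=(0.0, 1.0)),
]


def augment_images(images):
    """Run a batch of images through each augmentation layer in turn."""
    for layer in augmentation_layers:
        images = layer(images, training=True)
    return images


def augment_dataset(dataset):
    """Apply the augmentation to every (images, labels) batch via .map()."""
    return dataset.map(
        lambda images, labels: (augment_images(images), labels),
        num_parallel_calls=tf.data.AUTOTUNE,
    )
```

Calling augment_dataset(train_ds) returns a new dataset whose batches keep their original shape and labels but carry randomly transformed images.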

Model Architecture and Training

The model architecture used separable convolutional layers for feature extraction, each followed by batch normalization and max pooling layers. For the classifier, fully connected layers with dropout were implemented to mitigate overfitting, concluding with a sigmoid activation layer for binary classification (laughing vs. crying). An early stopping callback halted training once validation loss ceased to improve, and a model checkpoint callback ensured that only the best model was saved. Several learning rates were tried, each time compiling and training the model with the Adam optimizer and the binary cross-entropy loss function. After each training session, model accuracy was recorded to evaluate performance.
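A model of this kind might look like the sketch below. The number of blocks, filter counts, dense width, dropout rate, patience, and the checkpoint file name are all assumptions chosen for illustration; the source specifies only the layer types, the sigmoid output, the callbacks, the Adam optimizer, and the binary cross-entropy loss.

```python
import tensorflow as tf


def build_model(input_shape=(256, 256, 3), learning_rate=1e-3):
    """Separable-conv feature extractor plus a dense classifier with dropout."""
    layers = tf.keras.layers
    model = tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),
        # Feature extraction: filter counts (16/32/64) are illustrative.
        layers.SeparableConv2D(16, 3, padding="same", activation="relu"),
        layers.BatchNormalization(),
        layers.MaxPooling2D(),
        layers.SeparableConv2D(32, 3, padding="same", activation="relu"),
        layers.BatchNormalization(),
        layers.MaxPooling2D(),
        layers.SeparableConv2D(64, 3, padding="same", activation="relu"),
        layers.BatchNormalization(),
        layers.MaxPooling2D(),
        # Classifier: fully connected layer with dropout, then a sigmoid unit.
        layers.Flatten(),
        layers.Dense(128, activation="relu"),
        layers.Dropout(0.5),
        layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model


def make_callbacks(checkpoint_path="best_model.keras"):
    """Fresh callbacks per run: stop on stalled val_loss, keep the best model."""
    return [
        tf.keras.callbacks.EarlyStopping(
            monitor="val_loss", patience=3, restore_best_weights=True
        ),
        tf.keras.callbacks.ModelCheckpoint(
            checkpoint_path, monitor="val_loss", save_best_only=True
        ),
    ]


# Hypothetical learning-rate sweep (train_ds and val_ds are assumed to exist):
# for lr in (1e-2, 1e-3, 1e-4):
#     model = build_model(learning_rate=lr)
#     history = model.fit(train_ds, validation_data=val_ds, epochs=20,
#                         callbacks=make_callbacks(f"best_lr_{lr}.keras"))
```

Creating new callbacks for each run keeps the checkpoint of one learning rate from being compared against, or overwritten by, another.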

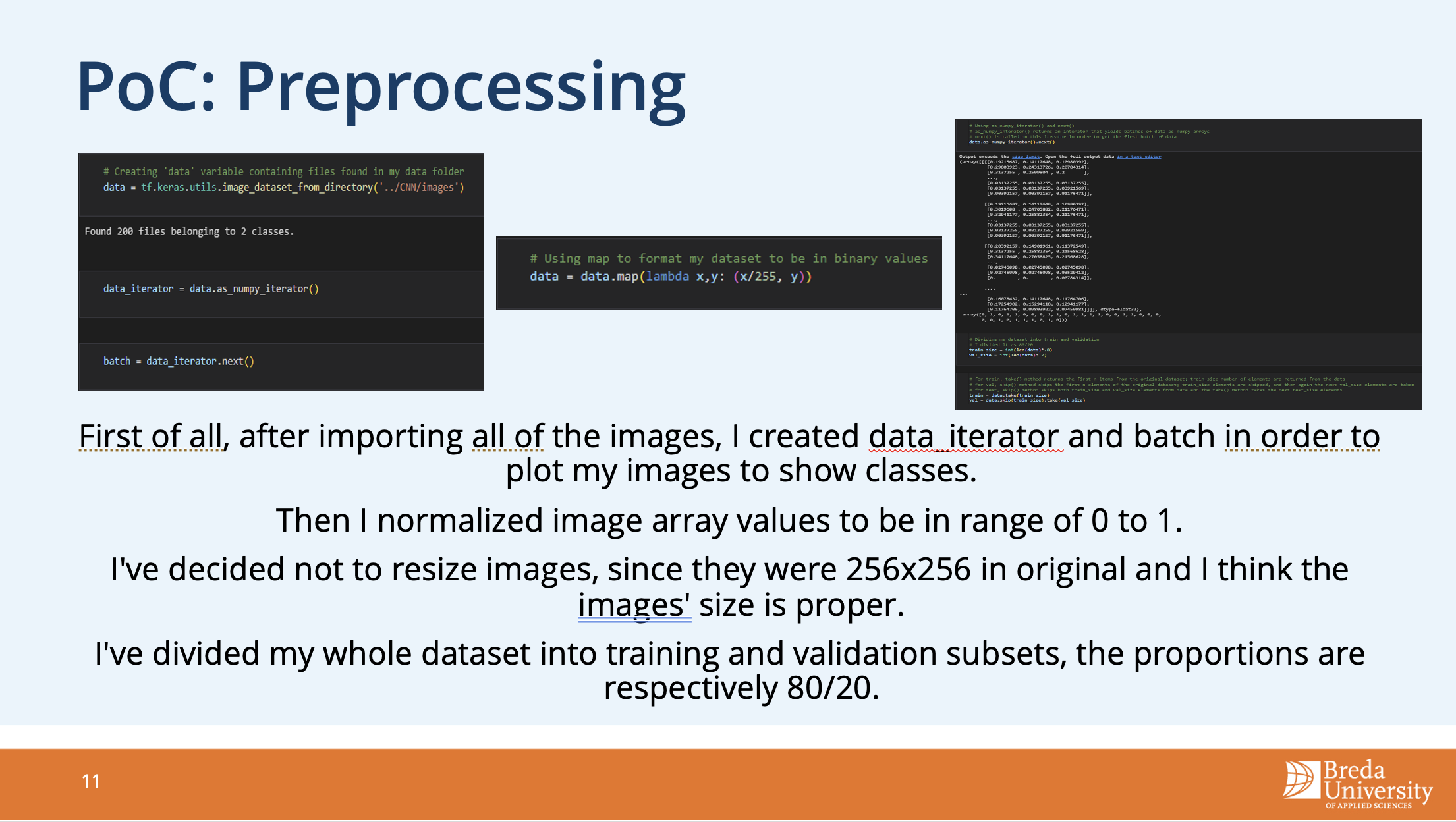

Preprocessing Techniques

After importing all images, a data iterator and batch were created to enable plotting and visualizing the images by class. Image array values were then normalized to be within the range of 0 to 1, enhancing model performance by ensuring consistent pixel value scaling. Image resizing was not applied, as the original image dimensions of 256x256 pixels were deemed suitable for this project. Finally, the dataset was split into training and validation subsets in an 80/20 ratio to facilitate effective model training and evaluation.
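As a rough sketch of the scaling and 80/20 split, assuming the images are held in a batched tf.data dataset of (images, labels) pairs with 8-bit pixel values: the helper name scale_and_split is hypothetical, and the split is done by whole batches, which is one of several reasonable ways to do it.

```python
import tensorflow as tf


def scale_and_split(dataset, train_fraction=0.8):
    """Scale pixel values to [0, 1] and split the batches into train/validation.

    Assumes `dataset` yields (images, labels) batches in a fixed order. If the
    dataset reshuffles on every iteration, take/skip would not give a stable
    split, so shuffle once up front instead.
    """
    scaled = dataset.map(
        lambda images, labels: (tf.cast(images, tf.float32) / 255.0, labels)
    )
    n_batches = len(scaled)  # raises TypeError if the dataset size is unknown
    n_train = round(n_batches * train_fraction)
    return scaled.take(n_train), scaled.skip(n_train)
```

With ten batches and the default fraction, the first eight batches go to training and the remaining two to validation.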

App (Prototype) Interface Design

The app interface was designed to be simple and efficient for end-users, specifically restaurant staff and managers. Real-time notifications, room overviews, and customer satisfaction histories were provided on the interface to support prompt and informed responses to customer needs. The system also allowed staff to manually label ambiguous expressions, saving images for verification if needed. This manual labeling capability was intended to improve usability and increase the app’s effectiveness in fast-paced environments. For prototyping purposes, proto.io was used.

Risk Mitigation

Several potential risks associated with implementing this technology were identified, including operational risks that could disrupt the dining experience, legal risks around data privacy, and quality risks in cases where the AI made incorrect predictions. To mitigate these risks, the project prioritized system reliability, encrypted sensitive data, and incorporated ongoing improvements to the model based on user feedback. Continuous learning capabilities were built in, allowing staff to manually label uncertain predictions. This feedback helped refine the model’s accuracy over time as it adapted to real-time footage.
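One way to decide which predictions count as uncertain is to threshold the sigmoid output, as in the sketch below. Everything specific here is an assumption made for illustration: the thresholds (0.3 and 0.7), the names Triage and triage_prediction, and the convention that the model's output is the probability of the laughing class.

```python
from dataclasses import dataclass


@dataclass
class Triage:
    label: str         # "laughing", "crying", or "needs_review"
    alert_staff: bool  # True when staff should check on the guest


def triage_prediction(prob_laughing, low=0.3, high=0.7):
    """Map a sigmoid output to a label, or flag it for manual labeling.

    Assumes the output is P(laughing); `low` and `high` are hypothetical
    thresholds that would need tuning on validation data.
    """
    if not 0.0 <= prob_laughing <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    if prob_laughing >= high:
        return Triage("laughing", alert_staff=False)
    if prob_laughing <= low:
        return Triage("crying", alert_staff=True)
    # Ambiguous score: leave the image for staff to label by hand.
    return Triage("needs_review", alert_staff=False)
```

For example, triage_prediction(0.05) yields a crying label with an alert, while triage_prediction(0.5) falls between the thresholds and is queued for manual review.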

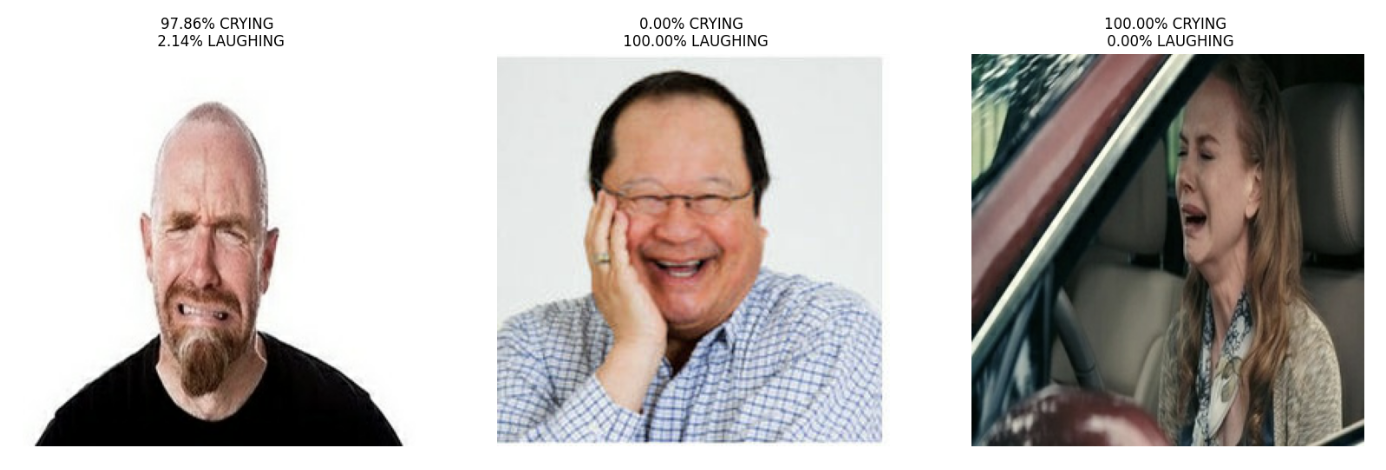

Project Results

The project successfully integrated AI-powered computer vision to automate the monitoring of customer emotions, distinguishing between laughing and crying expressions. Through deep learning models, the system provided emotion recognition and real-time notifications, alerting staff to any signs of customer distress. These features supported a high standard of customer care and enabled a responsive, flexible approach to emotion-based service in the restaurant setting.