Computer Vision (CV), Machine Learning (ML), Segmentation

In our study, we developed a multidisciplinary pipeline that combines computer vision, reinforcement learning, and robotics to segment plant roots and control a liquid-handling robot for targeted inoculation of those roots. NPEC (the Netherlands Plant Eco-phenotyping Centre) aimed to automate plant inoculation to make this critical research step faster, more precise, and more reproducible, saving both time and resources.

I’d like to share my journey, highlighting the obstacles faced and the strategies I used to overcome them.

Defining the Challenge

Plant phenotyping focuses on accurately measuring the physical and biological characteristics of plants; in this project, the emphasis was on how plants interact with microbial communities. Even minor measurement errors can skew research findings, so our goal was to build a reliable system that identifies and measures root structures across diverse environments.

A Comprehensive Approach

Our solution combined three integrated components: computer vision, robotics, and reinforcement learning. Each was vital to a pipeline that could accurately detect root tips and analyze their growth patterns.

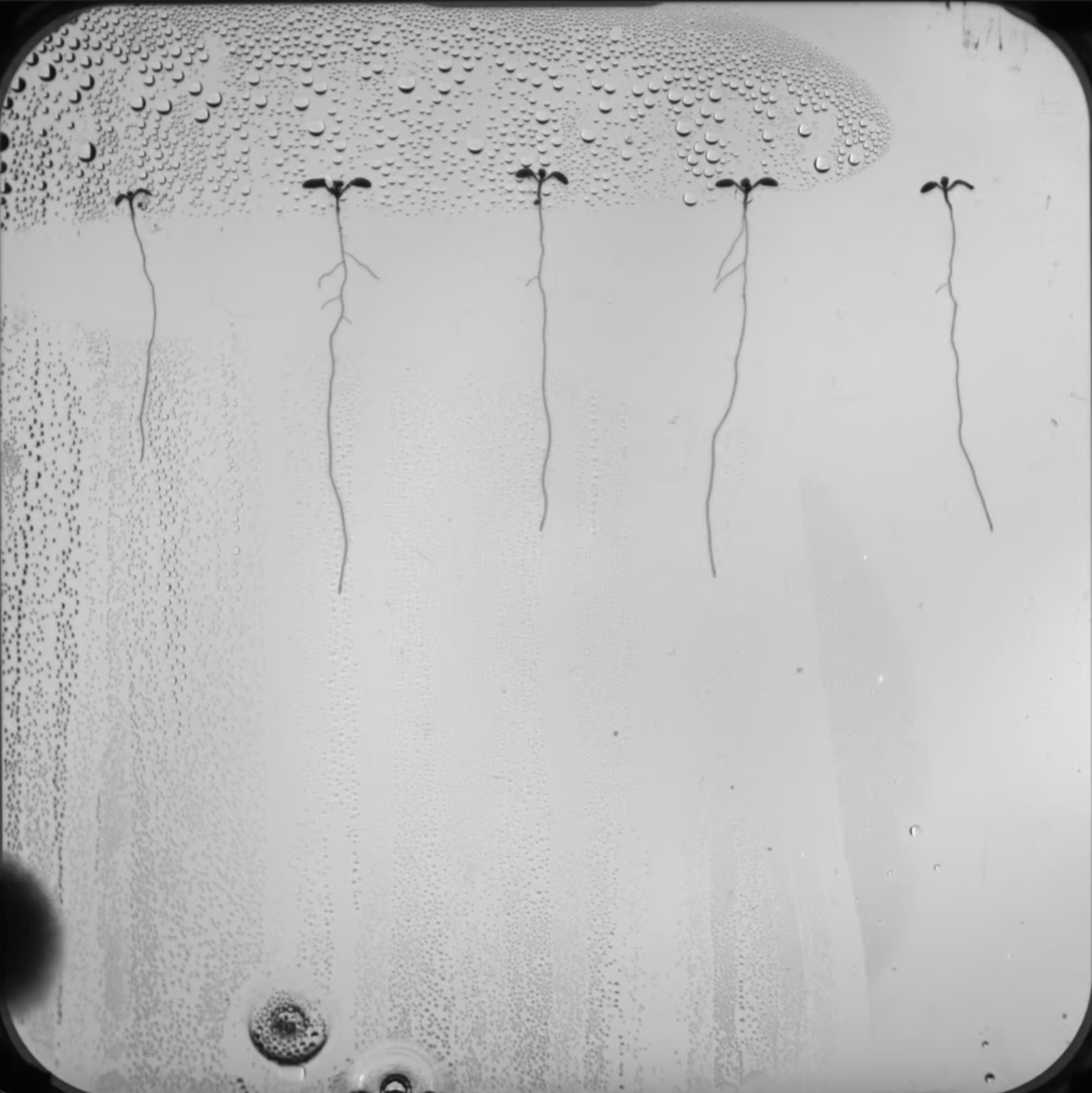

Developing the Computer Vision Pipeline

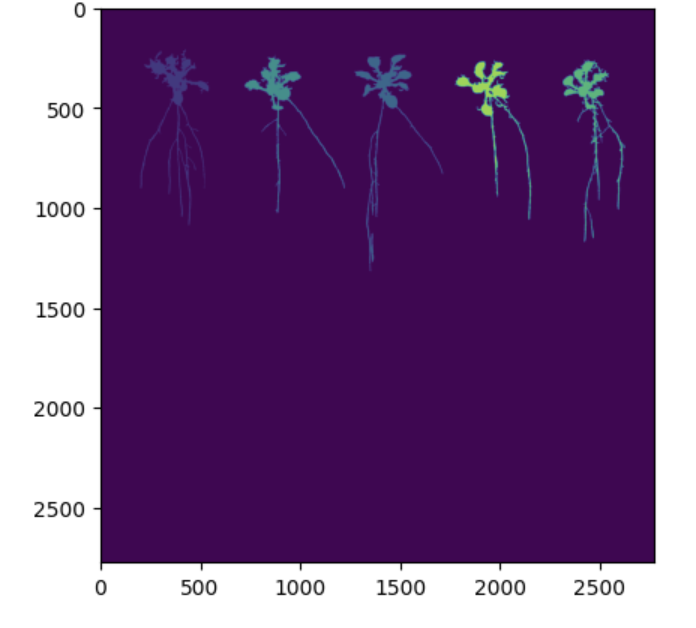

The first step was designing a robust computer vision pipeline. I tested several segmentation models to maximize the accuracy of root tip detection. This component was critical, because visualizing and measuring root structures underpins our understanding of plant-microbe interactions. After several rounds of model iteration and optimization, the pipeline met NPEC's accuracy standards and produced precise, reliable root segmentations.
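The post does not name the metric behind NPEC's accuracy standards. As a hedged illustration only, the sketch below scores a predicted binary root mask against a ground-truth mask using intersection-over-union (IoU) and the Dice coefficient, two common segmentation metrics. The function name and the list-of-lists mask format are assumptions, not the project's code.

```python
from typing import List, Tuple

# Hypothetical mask format: 2-D list, 1 = root pixel, 0 = background.
Mask = List[List[int]]


def iou_and_dice(pred: Mask, truth: Mask) -> Tuple[float, float]:
    """Return (IoU, Dice) for two binary masks of the same shape.

    Illustrative only: the metric NPEC actually used is not stated in the post.
    """
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("masks must have the same shape")
    intersection = pred_area = truth_area = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            pred_area += 1 if p else 0
            truth_area += 1 if t else 0
            intersection += 1 if (p and t) else 0
    union = pred_area + truth_area - intersection
    if union == 0:
        # Both masks are empty: treat this as a perfect match.
        return 1.0, 1.0
    iou = intersection / union
    dice = 2 * intersection / (pred_area + truth_area)
    return iou, dice


pred = [[0, 1, 1],
        [0, 1, 0]]
truth = [[0, 1, 0],
         [0, 1, 1]]
print(iou_and_dice(pred, truth))  # (0.5, 0.6666666666666666)
```

Dice weights the overlap more generously than IoU, so reporting both makes it easier to compare against whichever threshold a facility specifies.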

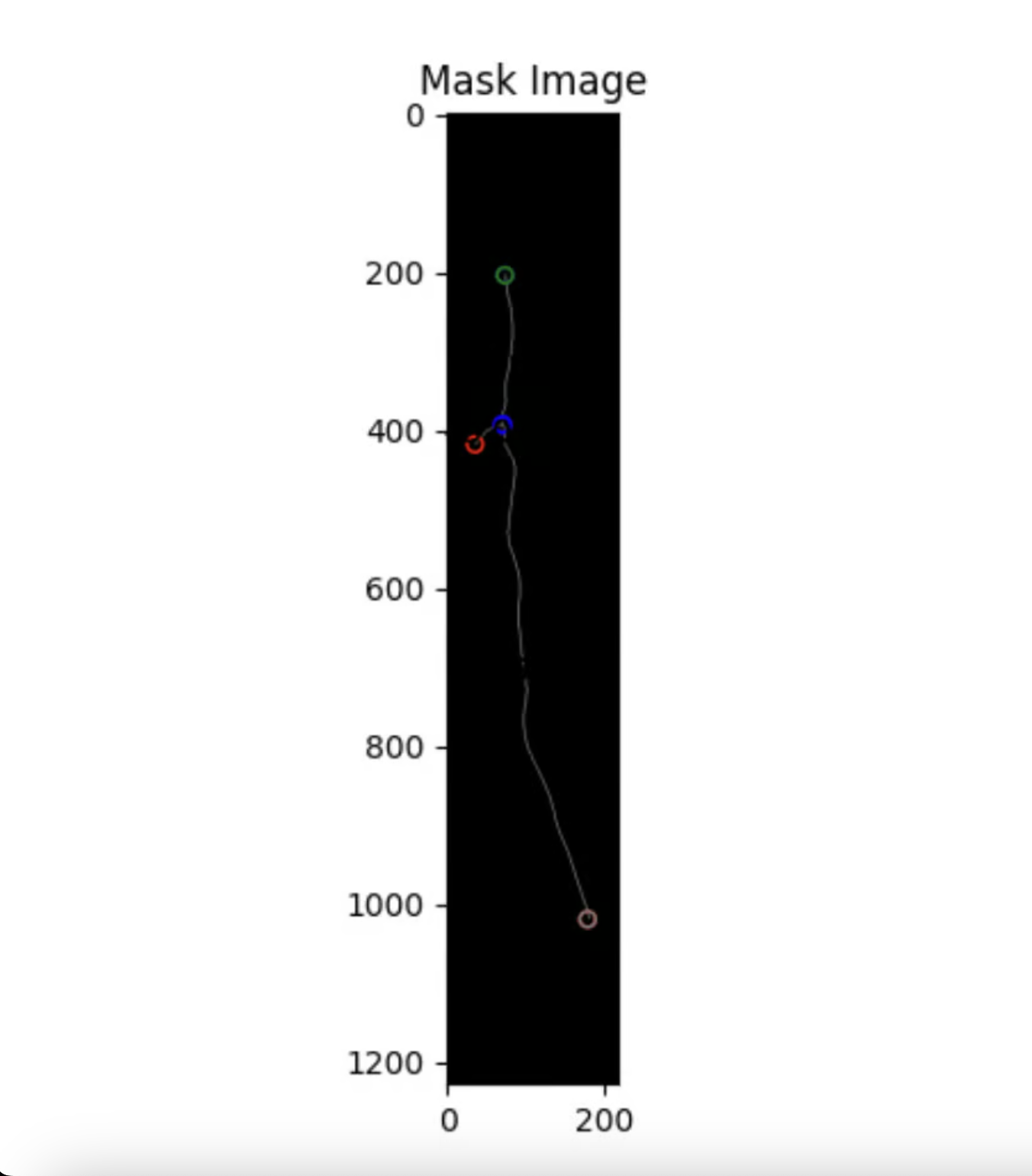

Root Landmark Detection

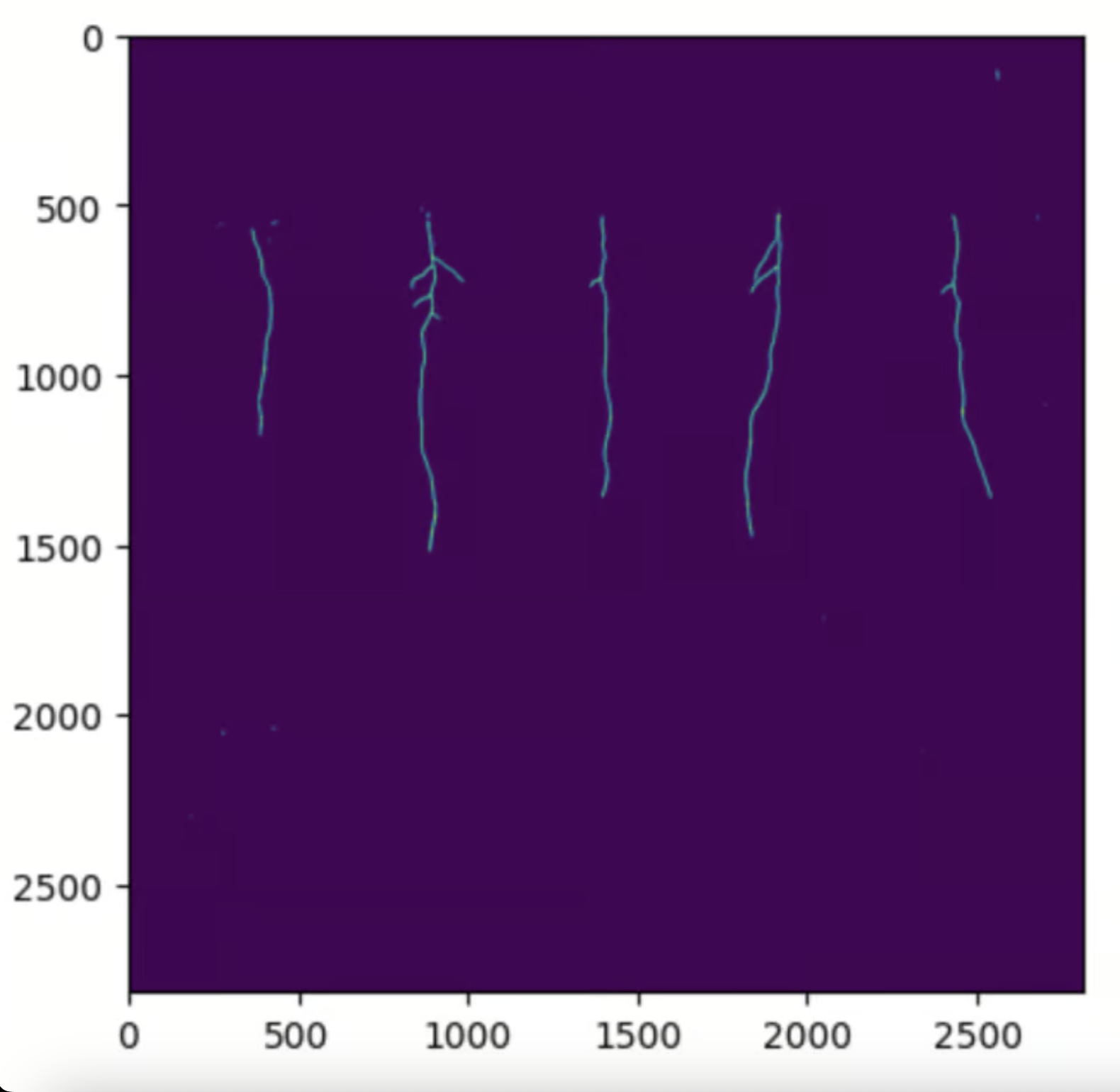

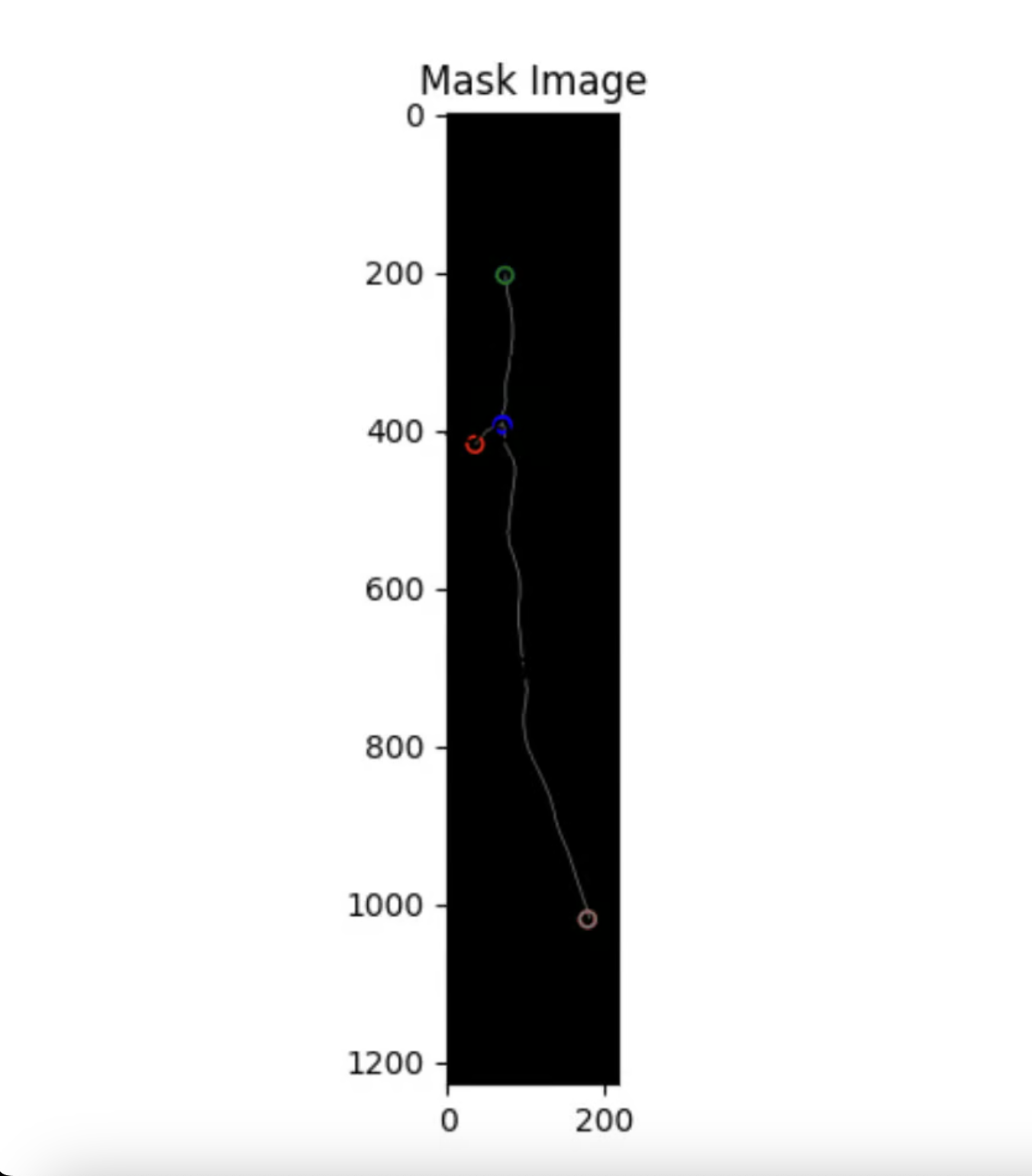

The deep learning model's instance segmentations gave accurate representations of the individual plant roots. From these segmentations, I performed landmark detection, pinpointing specific points on each root, such as the root tips, that could serve as targets in a simulation environment for training the inoculation process.
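The post does not describe how the landmarks were extracted. A minimal sketch, assuming each root instance is a labeled region in an integer label image and that roots grow downward in the image, takes the lowest pixel of each instance as its root tip. The function name and label format are hypothetical; a more robust pipeline might instead skeletonize each root and use the skeleton's endpoints.

```python
from typing import Dict, List, Tuple

# Hypothetical label image: 0 = background, k > 0 = pixel belongs to root instance k.
LabelImage = List[List[int]]


def find_root_tips(labels: LabelImage) -> Dict[int, Tuple[int, int]]:
    """Return {instance_id: (row, col)} for the lowest pixel of each root instance.

    Assumes roots grow toward larger row indices (downward in the image).
    Ties within the lowest row are resolved by keeping the leftmost pixel.
    """
    tips: Dict[int, Tuple[int, int]] = {}
    for row, line in enumerate(labels):
        for col, label in enumerate(line):
            if label == 0:
                continue
            # Replace the stored tip only when this pixel lies strictly lower.
            if label not in tips or row > tips[label][0]:
                tips[label] = (row, col)
    return tips


labels = [
    [1, 0, 2],
    [1, 0, 2],
    [0, 1, 2],
    [0, 1, 0],
]
print(find_root_tips(labels))  # {1: (3, 1), 2: (2, 2)}
```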

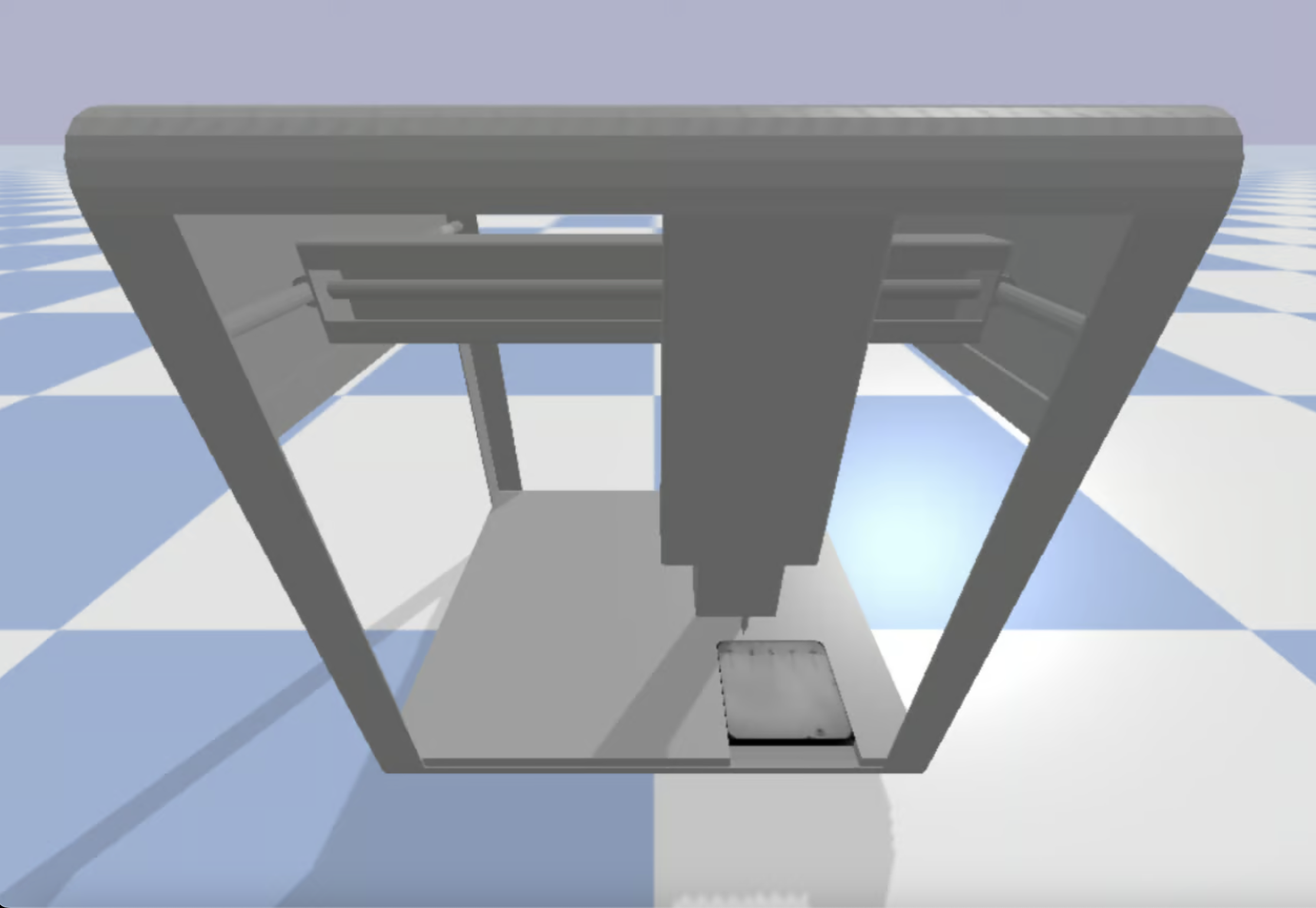

Creating a Simulation Environment

To facilitate testing, I built a virtual environment in PyBullet, a physics simulation library. This environment let us observe the robot's movement and verify that it could reliably reach key locations in its workspace. The robot consistently reached these locations in simulation, which made the environment a foundation for the later stages.
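One way to check reachability, sketched here under the assumption that the pipette's working envelope is an axis-aligned box, is to command the robot to each of the box's eight corners and compare where it ends up. The bounds, the 1 mm tolerance, and the helper names below are hypothetical, and in practice the reached positions would be read back from the PyBullet simulation rather than written by hand.

```python
from itertools import product
from math import dist
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def envelope_corners(lo: Point, hi: Point) -> List[Point]:
    """Return the 8 corners of an axis-aligned box given its min and max corners."""
    # zip pairs each axis's (min, max); product enumerates every combination.
    return list(product(*zip(lo, hi)))


def unreached(targets: Sequence[Point], reached: Sequence[Point],
              tol: float = 1e-3) -> List[Point]:
    """Return the targets whose reached position is more than tol away (meters)."""
    return [t for t, r in zip(targets, reached) if dist(t, r) > tol]


# Hypothetical workspace bounds in meters.
corners = envelope_corners((0.0, 0.0, 0.0), (0.2, 0.1, 0.1))
# Stand-in for positions read back from the simulation: every corner is hit
# within 0.2 mm except the last, which stops 5 mm short in z.
reached = [(x, y, z + 0.0002) for x, y, z in corners[:-1]]
reached.append((0.2, 0.1, 0.095))
print(unreached(corners, reached))  # [(0.2, 0.1, 0.1)]
```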

Integrating Reinforcement Learning

With the computer vision and simulation components working, I developed a Gymnasium-compatible wrapper around the simulation, allowing integration with Stable Baselines 3 for reinforcement learning. The resulting model learned to move the robot to specified target locations within its workspace and reached them to within 1 mm, a significant result.
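The post does not give the reward function that was used. As a hedged sketch, a common shaping for reaching tasks rewards the agent with the negative distance between the pipette tip and the target and adds a bonus once it is close enough. The function name, the bonus size, and the choice of a 1 mm success threshold (borrowed from the accuracy reported above) are assumptions.

```python
from math import dist
from typing import Sequence, Tuple

SUCCESS_THRESHOLD_M = 0.001  # Hypothetical: 1 mm, expressed in meters.
SUCCESS_BONUS = 10.0         # Hypothetical bonus for reaching the target.


def reach_reward(
    pipette_pos: Sequence[float],
    target_pos: Sequence[float],
    threshold: float = SUCCESS_THRESHOLD_M,
) -> Tuple[float, bool]:
    """Return (reward, terminated) for one step of a reaching task.

    The reward is the negative Euclidean distance to the target, plus a bonus
    when the pipette is within `threshold`; reaching the target ends the episode.
    """
    distance = dist(pipette_pos, target_pos)
    terminated = distance < threshold
    reward = -distance + (SUCCESS_BONUS if terminated else 0.0)
    return reward, terminated


print(reach_reward((0.0, 0.0, 0.0), (0.01, 0.0, 0.0)))
print(reach_reward((0.0, 0.0, 0.0), (0.0005, 0.0, 0.0)))
```

Inside a Gymnasium environment's `step()` method, these two values would fill the `reward` and `terminated` entries of the returned `(observation, reward, terminated, truncated, info)` tuple, and the wrapped environment could then be trained with a Stable Baselines 3 algorithm such as PPO; which algorithm the project used is not stated.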

Exploring PID Control as a Baseline

I also implemented a PID (Proportional-Integral-Derivative) controller as a baseline for the reinforcement learning approach. The PID controller reached a reasonable accuracy of 6 mm but did not match the 1 mm precision of the reinforcement learning model. In this setting, the comparison showed the advantage of machine learning for precise robotic control.
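For reference, a textbook discrete PID controller for a single axis is sketched below; the post does not share its implementation or gains. The gains, time step, and toy plant (a velocity-commanded axis whose position integrates the controller output) are hypothetical, and running one such controller per axis (x, y, z) is an assumption about how it would be applied to the robot.

```python
from typing import Optional


class PID:
    """Discrete PID controller for one axis. Gains here are placeholders."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float) -> None:
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float) -> float:
        """Return the control command for one time step."""
        error = setpoint - measured
        self.integral += error * self.dt
        # Skip the derivative on the first call, when there is no previous error.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(pid: PID, setpoint: float, start: float = 0.0, steps: int = 3000) -> float:
    """Drive a toy axis whose position integrates the command (position += u * dt)."""
    position = start
    for _ in range(steps):
        command = pid.update(setpoint, position)
        position += command * pid.dt
    return position


pid = PID(kp=4.0, ki=0.5, kd=0.05, dt=0.01)
final = simulate(pid, setpoint=0.1)  # Hypothetical 10 cm move, in meters.
print(f"final error: {abs(final - 0.1) * 1000:.3f} mm")
```

The final accuracy of such a controller depends heavily on its gains, which is why gain tuning is one of the optimizations worth exploring.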

System Integration into a Comprehensive Solution

The final stage integrated the computer vision pipeline with the reinforcement learning model and with the PID controller, producing two complete systems. A significant challenge was translating pixel coordinates from the vision output into real-world positions for the robot. I addressed this by deriving a formula to convert between the two coordinate systems, which allowed both integrated systems to run end to end.
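The post does not give the conversion formula itself. A minimal sketch, assuming the plate is axis-aligned with the robot, image columns run along the robot's x axis and rows along its y axis, and a single uniform scale (millimeters per pixel) applies, is a scale-and-offset mapping. The calibration values below (a 150 mm plate spanning 3000 pixels, and the origin offsets) are made up for illustration.

```python
from typing import Tuple


def pixel_to_robot(
    pixel: Tuple[float, float],
    origin_px: Tuple[float, float],
    origin_robot_mm: Tuple[float, float],
    mm_per_px: float,
) -> Tuple[float, float]:
    """Map an image pixel (row, col) to robot (x, y) in millimeters.

    Assumes image columns align with robot x, rows with robot y, and a uniform
    scale; `origin_px` and `origin_robot_mm` describe the same physical point
    (e.g. a plate corner) in each coordinate system.
    """
    row, col = pixel
    origin_row, origin_col = origin_px
    x0, y0 = origin_robot_mm
    x = x0 + (col - origin_col) * mm_per_px
    y = y0 + (row - origin_row) * mm_per_px
    return x, y


# Hypothetical calibration: a 150 mm wide plate spans 3000 pixels.
mm_per_px = 150.0 / 3000.0  # 0.05 mm per pixel
root_tip_px = (1000, 1500)  # (row, col), e.g. from landmark detection
x, y = pixel_to_robot(root_tip_px, origin_px=(100, 200),
                      origin_robot_mm=(10.0, 60.0), mm_per_px=mm_per_px)
print(f"x = {x:.2f} mm, y = {y:.2f} mm")  # x = 75.00 mm, y = 105.00 mm
```

If the controller or simulator expects meters, divide the result by 1000; if the robot's y axis points the opposite way from image rows, the sign of the row term flips.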

Key Findings and Future Directions

This research showed that the reinforcement learning model outperformed the PID baseline, the traditional control method we tested, underscoring its potential value for future plant-microbe studies. Our findings also demonstrated that combining conventional computer vision with advanced machine learning techniques allows precise root measurements.

There remains potential for further optimization: fine-tuning the PID controller's parameters and experimenting with alternative reward functions for the reinforcement learning model could further improve accuracy and performance.

Conclusion: A Learning Experience

This project has been an enriching journey, deepening my appreciation of how advanced technology can propel plant science. From building a computer vision pipeline to integrating diverse systems, I gained invaluable insights into the complexities of plant-microbe interactions and the promising applications of technology in this field.